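Background

A system we work on recently ran into the limits of our internal S3 storage: each bucket supports at most 1 million files (standard S3 has no such limit), while our system mostly stores large numbers of small files. We therefore needed to evaluate alternatives, and after reviewing several approaches described online we decided to test FastDFS.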

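Overview

FastDFS has three roles:

Client: the client that uploads files

Tracker Server: manages the Storage nodes and dispatches requests; it can be clustered, and all tracker nodes are peers

Storage Server: the node that actually stores the data; it can be clustered, and storage nodes are organized into groups whose members are peers holding copies of the same files; which storage a file is written to is decided by the Tracker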

Overall approach

Use OpenResty + Lua to forward files to FastDFS, so that any client can upload files over plain HTTP

Run a custom script on a schedule to delete expired files

Upload flow

```mermaid
sequenceDiagram
    participant C as Client
    participant T as Tracker Server
    participant S as Storage Server
    S -->> T: 1. Periodically report status to the Tracker
    C ->> T: 2. Send upload request
    T ->> T: 3. Look up an available Storage
    T -->> C: 4. Return Storage info (IP and port)
    C ->> S: 5. Upload file (file content and metadata)
    S ->> S: 6. Generate file_id
    S ->> S: 7. Write uploaded content to disk
    S -->> C: 8. Return file_id
    C ->> C: 9. Store file info
```
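From the client's side, steps 2 to 8 can be driven with the stock `fdfs_upload_file <client.conf> <local_file>` command, which asks the tracker for a storage, sends the file there, and prints the file_id on success. The minimal sketch below assumes that CLI is on `PATH`; the `upload_and_record` helper and its tab-separated index file are hypothetical stand-ins for step 9 ("store file info").

```shell
# Hypothetical client wrapper around the stock fdfs_upload_file CLI.
upload_and_record() {
    local conf=$1 file=$2 index=$3 file_id
    # Steps 2-8 (tracker lookup, upload to storage, file_id returned)
    # all happen inside fdfs_upload_file. Assigned on its own line so a
    # CLI failure is not masked by `local`.
    file_id=$(fdfs_upload_file "$conf" "$file") || return 1
    # A file_id looks like group1/M00/00/00/<name>; reject anything else.
    case $file_id in
        ?*/M??/?*) ;;
        *) echo "unexpected upload output: $file_id" >&2; return 1 ;;
    esac
    # Step 9: remember which local file ended up under which file_id.
    printf '%s\t%s\n' "$file" "$file_id" >> "$index"
    printf '%s\n' "$file_id"
}
```

Example call (paths are illustrative): `upload_and_record /etc/fdfs/client.conf ./report.pdf ./uploads.tsv`. Keeping the file_id on the client side matters because it is the only handle needed later for downloads and deletes.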

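Download flow

```mermaid
sequenceDiagram
    participant C as Client
    participant T as Tracker Server
    participant S as Storage Server
    S -->> T: 1. Periodically report status to the Tracker
    C ->> T: 2. Send download request
    T ->> T: 3. Look up an available Storage (check sync status)
    T -->> C: 4. Return Storage info (IP and port)
    C ->> S: 5. Send file_id (group name, path, file name)
    S ->> S: 6. Locate the file within the cluster
    S -->> C: 7. Return file content
```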
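Step 5 of the download flow only needs the file_id, because it already encodes the group name, the path and the file name. A small POSIX shell sketch of that split; the `parse_file_id` helper, the `FDFS_*` variable names and the example id are hypothetical.

```shell
# Split a FastDFS file_id such as group1/M00/00/00/wKgAAWabc.jpg into the
# parts carried by the download request. Results go into FDFS_* variables.
parse_file_id() {
    local id=$1
    FDFS_GROUP=${id%%/*}          # group1 (selects the storage group)
    FDFS_REMOTE=${id#*/}          # M00/00/00/wKgAAWabc.jpg (remote filename)
    FDFS_PATH=${FDFS_REMOTE%/*}   # M00/00/00 (store path + two-level dirs)
    FDFS_FILE_NAME=${id##*/}      # wKgAAWabc.jpg
    # A valid id has a group, an Mxx store path and something after it.
    case $id in
        ?*/M??/?*) return 0 ;;
        *) return 1 ;;
    esac
}
```

With the stock CLI the whole download flow is a single call, `fdfs_download_file <client.conf> <file_id> [local_filename]`; the tracker chooses a storage in `FDFS_GROUP` that already holds the file.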

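Dockerizing

We build a Docker cluster with 2 tracker nodes, 2 storage groups (2 storage nodes per group) and a single nginx entry point.

The benefit of this layout is that each node only needs its own configuration changed, so the cluster can be scaled out on demand.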

Tracker image

```dockerfile
FROM debian:stable
RUN apt-get update && apt-get -y install git wget ca-certificates libpcre3 libpcre3-dev

WORKDIR /home/tracker-server/var/tmp/
RUN git clone https://github.com/happyfish100/libfastcommon.git
RUN wget https://github.com/happyfish100/fastdfs/archive/master.tar.gz -O fastdfs-master.tar.gz

# Build and install libfastcommon (FastDFS depends on it)
WORKDIR /home/tracker-server/var/tmp/libfastcommon
RUN ./make.sh && ./make.sh install
# "RUN export ..." only lasts for that single RUN step; ENV persists
ENV LD_LIBRARY_PATH=/usr/lib/
RUN ln -s /usr/lib/libfastcommon.so /usr/local/lib/libfastcommon.so

# Build and install FastDFS
WORKDIR /home/tracker-server/var/tmp/
RUN tar xzf fastdfs-master.tar.gz
WORKDIR /home/tracker-server/var/tmp/fastdfs-master
RUN ./make.sh && ./make.sh install

# Configuration, log and data directories
COPY ./conf/common /home/tracker-server/var/conf/tracker
COPY ./conf/tracker /home/tracker-server/var/conf/tracker
RUN mkdir -p /home/tracker-server/var/log/tracker
RUN mkdir -p /home/tracker-server/data/storage0

# Start the tracker; tail keeps the container's main process alive
ENTRYPOINT /usr/bin/fdfs_trackerd /home/tracker-server/var/conf/tracker/tracker.conf & tail -f /dev/null
```

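Storage image

Note: storage groups are distinguished only by the contents of `storage.conf` (its `group_name` setting). Create one `storage.conf` per group you need, or inject the values from environment variables with a script when the container starts.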
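For the environment-variable route, a container entrypoint could rewrite the two settings that differ per node before starting `fdfs_storaged`. A sketch, assuming a template `storage.conf` baked into the image: `group_name` and `tracker_server` are standard `storage.conf` keys (`tracker_server` may repeat, one line per tracker, and 22122 is the default tracker port), while the `render_storage_conf` helper and its argument order are hypothetical.

```shell
# Hypothetical entrypoint helper: copy a storage.conf template, replacing
# group_name and tracker_server with the values given as arguments.
# Usage: render_storage_conf <template> <output> <group> <tracker>...
render_storage_conf() {
    local template=$1 out=$2 group=$3 t
    [ -r "$template" ] || return 1
    shift 3   # remaining args are tracker addresses, e.g. tracker-server:22122
    # Keep every line except existing group_name / tracker_server settings.
    grep -v -e '^[[:space:]]*group_name[[:space:]]*=' \
            -e '^[[:space:]]*tracker_server[[:space:]]*=' \
            "$template" > "$out" || :
    printf 'group_name=%s\n' "$group" >> "$out"
    for t in "$@"; do
        printf 'tracker_server=%s\n' "$t" >> "$out"
    done
}
```

An entrypoint might then run something like `render_storage_conf storage.conf.tpl storage.conf "${GROUP_NAME:-group1}" tracker-server:22122 tracker-server-2:22122` (the `.tpl` file and `GROUP_NAME` variable are illustrative), letting one image serve both groups.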

```dockerfile
FROM debian:stable
RUN apt-get update && apt-get -y install git wget ca-certificates libpcre3 libpcre3-dev

WORKDIR /home/storage-server/var/tmp/
RUN git clone https://github.com/happyfish100/libfastcommon.git
RUN wget https://github.com/happyfish100/fastdfs/archive/master.tar.gz -O fastdfs-master.tar.gz
RUN wget https://nginx.org/download/nginx-1.14.0.tar.gz -O nginx-1.14.0.tar
RUN wget https://github.com/happyfish100/fastdfs-nginx-module/archive/master.tar.gz -O fastdfs-nginx-module-master.tar.gz

# Build and install libfastcommon
WORKDIR /home/storage-server/var/tmp/libfastcommon
RUN ./make.sh && ./make.sh install
# "RUN export ..." only lasts for that single RUN step; ENV persists
ENV LD_LIBRARY_PATH=/usr/lib/
RUN ln -s /usr/lib/libfastcommon.so /usr/local/lib/libfastcommon.so

WORKDIR /home/storage-server/var/tmp/
RUN tar xzf fastdfs-master.tar.gz
RUN tar xzf nginx-1.14.0.tar
RUN tar xzf fastdfs-nginx-module-master.tar.gz

# Build and install FastDFS
WORKDIR /home/storage-server/var/tmp/fastdfs-master
RUN ./make.sh && ./make.sh install

# Storage, fastdfs-nginx-module and nginx configuration
COPY ./conf/storage/storage.conf /home/storage-server/var/conf/storage/storage.conf
COPY ./conf/storage/mime.types /home/storage-server/var/conf/fdfs/mime.types
COPY ./conf/storage/anti-steal.jpg /home/storage-server/var/conf/fdfs/anti-steal.jpg
COPY ./conf/storage/fastdfs-nginx-module.config /home/storage-server/var/tmp/fastdfs-nginx-module-master/src/config
COPY ./conf/storage/fastdfs-nginx-module.mod_fastdfs.conf /home/storage-server/var/conf/fdfs/mod_fastdfs.conf
COPY ./conf/common/http.conf /home/storage-server/var/conf/fdfs/http.conf
COPY ./conf/common/nginx.conf /home/storage-server/var/conf/nginx/nginx.conf
RUN mkdir -p /home/storage-server/var/log/storage
RUN mkdir -p /home/storage-server/data/storage0

# Build nginx with the fastdfs-nginx-module compiled in
WORKDIR /home/storage-server/var/tmp/nginx-1.14.0
RUN ./configure --add-module=../fastdfs-nginx-module-master/src/
RUN make && make install

# Expose the data directory under M00 and reuse nginx's mime.types
RUN mkdir /home/storage-server/data/storage0/data
RUN ln -s /home/storage-server/data/storage0/data /home/storage-server/data/storage0/data/M00
RUN cp /usr/local/nginx/conf/mime.types /home/storage-server/var/conf/nginx/mime.types

# Start the storage daemon, then run nginx in the foreground
ENTRYPOINT /usr/bin/fdfs_storaged /home/storage-server/var/conf/storage/storage.conf & /usr/local/nginx/sbin/nginx -c /home/storage-server/var/conf/nginx/nginx.conf -g "daemon off;"
```
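The `M00` symlink in the storage image reflects how FastDFS maps a file_id onto disk: the group name selects the group, `M00` stands for `store_path0`, and the rest is the two-level directory under `data/`. A minimal sketch of that mapping, assuming this image's `store_path0` is `/home/storage-server/data/storage0` (inferred from the `mkdir`/`ln -s` lines; the actual `storage.conf` is not shown) and handling only `M00`; `fid_to_disk_path` is a hypothetical helper.

```shell
# Map a file_id to the file's location on the storage node's disk.
# Only M00 (store_path0) is handled; M01, M02, ... would map to
# store_path1, store_path2, ... and are rejected here.
fid_to_disk_path() {
    local store_path0=$1 file_id=$2
    local remote=${file_id#*/}        # drop the group: M00/00/00/name
    case $remote in
        M00/*) printf '%s/data/%s\n' "${store_path0%/}" "${remote#M00/}" ;;
        *) return 1 ;;
    esac
}
```

For example, `group1/M00/00/00/abc.jpg` resolves to `/home/storage-server/data/storage0/data/00/00/abc.jpg`; the cleanup script in the next section performs the reverse conversion.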

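Scheduled cleanup of expired files

A cron entry runs every 5 minutes, finds files under the storage data directory whose status changed more than 5 minutes ago (`-cmin +5`), and passes each one to `delete.sh`. The extra `root` field means the line belongs in the system crontab (`/etc/crontab` or a file under `/etc/cron.d/`), not in a user crontab.

```
0,5,10,15,20,25,30,35,40,45,50,55 * * * * root (/bin/bash -c "find /home/storage-server/data/storage0/data/ -type f -cmin +5 -exec /home/storage-server/running/fastdfs/crash-upload-file-server/conf/storage/delete.sh {} \;" >> /home/storage-server/var/log/storage/crontab.log 2>&1)
```

`delete.sh` turns the on-disk path back into a FastDFS file_id and deletes the file with `fdfs_delete_file`:

```bash
#!/bin/bash
# Group served by this node (group2 nodes need group="group2")
group="group1"

delete_file() {
    local file_name=$1
    # /home/storage-server/data/storage0/data/00/00/xxx -> 00/00/xxx
    file_name=${file_name##*/data/}
    # 00/00/xxx -> group1/M00/00/00/xxx
    file_name=$group/M00/$file_name
    /usr/bin/fdfs_delete_file /home/storage-server/running/fastdfs/crash-upload-file-server/conf/common/client.conf "$file_name"
    echo "remove file $file_name"
}

delete_file "$1"
```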
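Two refinements to `delete.sh` may be worth considering. First, the group is hard-coded, so one copy of the script cannot serve both groups. Second, if `base_path` and `store_path0` point to the same directory, its `data/` also holds FastDFS's own bookkeeping files (for example `storage_stat.dat` and the `sync/` binlogs), which `find -type f` would pick up too. A sketch of both fixes; the helper names are hypothetical, and the path filter assumes the default two-level `00`..`FF` directory layout.

```shell
# Print the group_name configured in a storage.conf (last match wins).
conf_group_name() {
    sed -n 's/^[[:space:]]*group_name[[:space:]]*=[[:space:]]*\([^[:space:]]*\).*/\1/p' "$1" | tail -n 1
}

# Turn an on-disk path under <store_path0>/data/ into a file_id.
# Only paths inside the XX/XX/ hex directories are accepted, so FastDFS's
# own files are never handed to fdfs_delete_file.
path_to_file_id() {
    local group=$1 rel=${2##*/data/}
    case $rel in
        [0-9A-F][0-9A-F]/[0-9A-F][0-9A-F]/?*)
            printf '%s/M00/%s\n' "$group" "$rel" ;;
        *)
            return 1 ;;
    esac
}
```

Inside `delete.sh`, `group=$(conf_group_name /home/storage-server/var/conf/storage/storage.conf)` would replace the hard-coded value, and a path for which `path_to_file_id` fails would simply be skipped.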

Nginx image

```dockerfile
FROM debian:stable
RUN apt-get update && apt-get -y install libreadline-dev libncurses5-dev libpcre3-dev libssl-dev perl make build-essential curl wget

WORKDIR /home/nginx-server/var/tmp/
RUN wget https://openresty.org/download/openresty-1.13.6.2.tar.gz
RUN tar -xzvf openresty-1.13.6.2.tar.gz

# Build OpenResty with LuaJIT
WORKDIR /home/nginx-server/var/tmp/openresty-1.13.6.2/
RUN ./configure --prefix=/opt/openresty --with-luajit --without-http_redis2_module --with-http_iconv_module
# "&&", not "&": install only after make has finished successfully
RUN make && make install

# nginx config, Lua upload scripts and test page
COPY ./conf/nginx/nginx.conf /home/nginx-server/var/conf/openresty/nginx.conf
WORKDIR /opt/openresty/nginx/sbin/
COPY ./lua /home/nginx-server/running/fastdfs/crash-upload-file-server/lua/
COPY ./index.html /home/nginx-server/running/fastdfs/crash-upload-file-server/
RUN mkdir -p /home/nginx-server/var/log/openresty/

ENTRYPOINT ./nginx -c /home/nginx-server/var/conf/openresty/nginx.conf -g "daemon off;"
```

Docker Compose

```yaml
tracker-server:
  image: fastdfs-tracker
tracker-server-2:
  image: fastdfs-tracker
storage-server-group1-1:
  image: fastdfs-storage
  links:
    - tracker-server:tracker-server
    - tracker-server-2:tracker-server-2
storage-server-group1-2:
  image: fastdfs-storage
  links:
    - tracker-server:tracker-server
    - tracker-server-2:tracker-server-2
storage-server-group2-1:
  image: fastdfs-storage_2
  links:
    - tracker-server:tracker-server
    - tracker-server-2:tracker-server-2
storage-server-group2-2:
  image: fastdfs-storage_2
  links:
    - tracker-server:tracker-server
    - tracker-server-2:tracker-server-2
nginx_test:
  image: nginx_test
  ports:
    - '20022:22'
    - '20080:80'
  links:
    - tracker-server:tracker-server
    - tracker-server-2:tracker-server-2
    - storage-server-group1-1:storage-server-group1-1
    - storage-server-group1-2:storage-server-group1-2
    - storage-server-group2-1:storage-server-group2-1
    - storage-server-group2-2:storage-server-group2-2
```

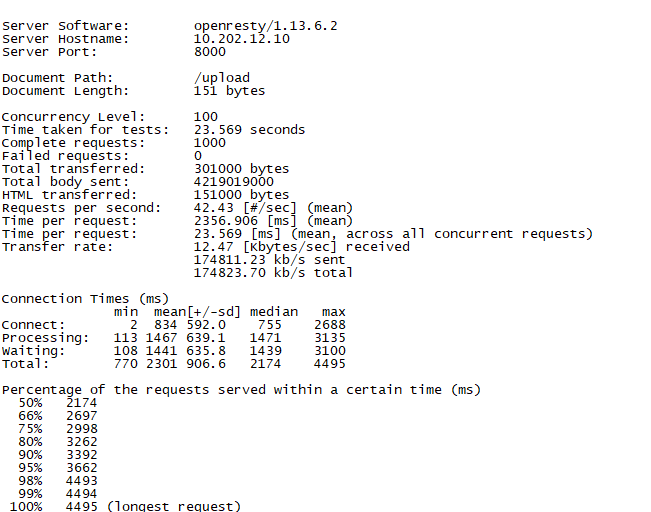

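Performance

After building the images, we brought the cluster up directly with compose and ran a simple load test. Overall performance is good, close to the peak a single machine can deliver, and because every role can be scaled out horizontally, adding capacity is not much of a burden.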

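Test setup: 2 trackers + 2×2 storage nodes

File size: 4 MB on average

QPS: 60

Upload, 90th-percentile response time: 3.5 s

Upload, maximum request time: 5 s

Download, maximum response time: 1 s

Inter-container network bandwidth: 1 Gb/s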
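The reported figures can be cross-checked with simple arithmetic. The sketch below multiplies QPS by file size to get payload bandwidth and applies Little's law (requests in flight ≈ arrival rate × latency) to estimate concurrency. Little's law and the use of p90 instead of mean latency are our additions, so `in_flight` is an upper-end estimate. If all 60 QPS were 4 MB uploads crossing one 1 Gb/s link, the payload alone (about 1.9 Gb/s) would be roughly twice that link's capacity, before counting the copy FastDFS makes to the other storage node in the same group. The test description does not say whether the QPS mixes uploads and downloads or how traffic was spread across links, so treat this as a consistency check rather than a diagnosis.

```shell
# Back-of-envelope check using integer shell arithmetic.
qps=60            # requests per second (reported)
avg_mb=4          # average file size in MB (reported)
p90_ms=3500       # upload p90 latency in ms (reported)
link_mbit=1000    # 1 Gb/s link (reported)

payload_mb_s=$((qps * avg_mb))            # payload throughput, MB/s
payload_mbit_s=$((payload_mb_s * 8))      # same, in Mb/s
in_flight=$((qps * p90_ms / 1000))        # Little's law with p90 latency
link_files_s=$((link_mbit / 8 / avg_mb))  # 4 MB files one link can carry per second

printf 'payload: %d MB/s (%d Mb/s), in flight: ~%d, link limit: ~%d files/s\n' \
    "$payload_mb_s" "$payload_mbit_s" "$in_flight" "$link_files_s"
```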

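Other options

We also looked at Ceph. Ceph supports block storage, but we found relatively little usage documentation for it, so in the end we chose FastDFS.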